Semantics at the heart of Generative AI

Last year, Google announced that its search engine would be enriched with Generative AI. Microsoft made no secret of its plans either: Copilot already enhances Bing searches. Meanwhile, OpenAI is keeping pace: the organization recently released a prototype called SearchGPT. Its results are clear and straightforward, and users can interact with it as they would in a conversation with ChatGPT.

How can this new engine hope to compete with the two giants already dominating the field? OpenAI's confidence stems, in part, from its more advanced use of an underlying technology: semantic search.

At the core of Generative AI, this innovation is gradually becoming integral to all technologies associated with natural language.

How does it work, and how can we harness it?

What is semantics?

Semantics refers to the underlying concepts—more or less abstract—behind a word or phrase. It helps address questions about words, such as their structure, associations, and meanings. In essence, semantics represents all the information that can be conveyed through a word or group of words. The computational understanding and translation of this concept have made technologies like Large Language Models (LLMs) possible.

How is a word translated in computing?

Tokenization

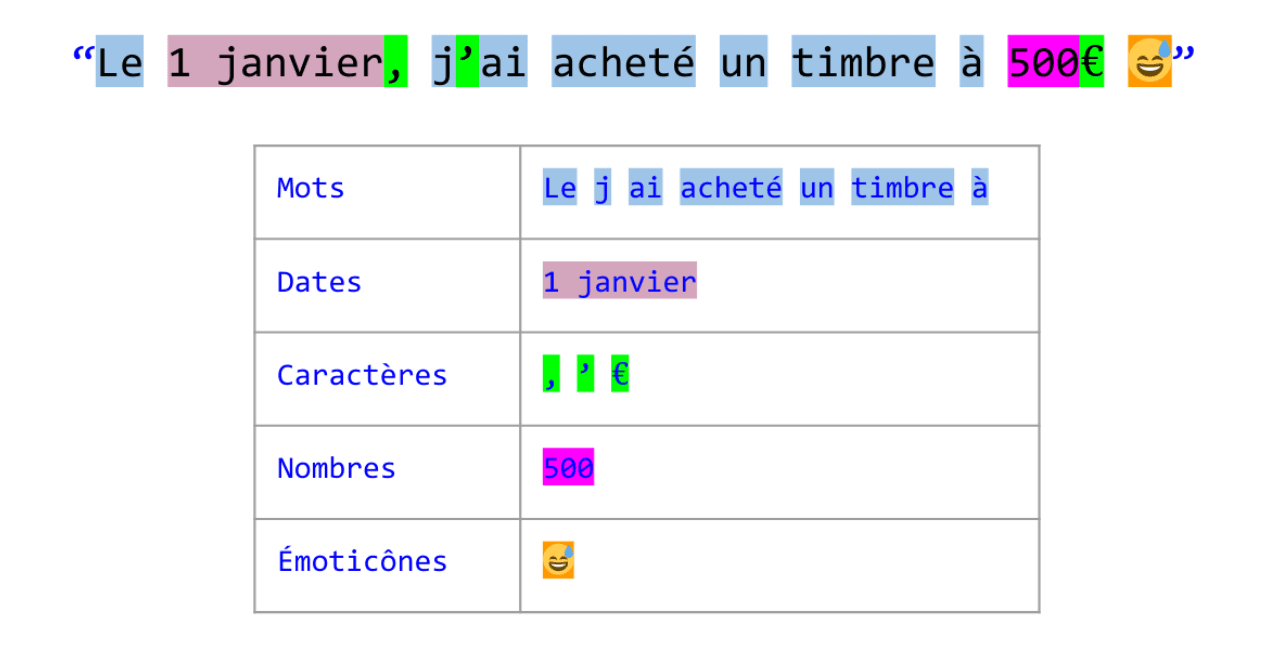

A machine (and by extension, artificial intelligence) isn't human; it only understands numbers. Therefore, the first step is converting the word into a number that the machine can interpret. This step is called "tokenization". Using a vast conversion dictionary, we assign a "number" to each group of characters, called a "token." It's not just words! Dates, special characters, numbers, emojis—everything needs to be "tokenized".

For example, in this extensive conversion dictionary, the word "bought" might be assigned the value "42368," and the emoji "😅" the value "784235". The goal is to match an "identifier" to each character group, simplifying the AI's training process (using neural networks). This phase effectively breaks down our text into small semantic entities.
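As a minimal sketch of the idea, the conversion dictionary can be pictured as a plain lookup table. The vocabulary below is hypothetical (only the IDs for "bought" and "😅" come from the example above), and splitting on whitespace is a simplification: production tokenizers typically split text into subword pieces.

```python
# Toy conversion dictionary. All IDs are illustrative; only "bought" and "😅"
# reuse the example values from the text.
VOCAB = {"<unk>": 0, "i": 101, "a": 102, "book": 103, "bought": 42368, "😅": 784235}


def tokenize(text: str) -> list[int]:
    """Map each whitespace-separated piece to its ID; unknown pieces become <unk>."""
    return [VOCAB.get(piece, VOCAB["<unk>"]) for piece in text.lower().split()]


token_ids = tokenize("I bought a book 😅")
print(token_ids)
```

Anything missing from the dictionary falls back to a single "unknown" identifier here, which is one reason real vocabularies are built from smaller character groups rather than whole words.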

Embedding

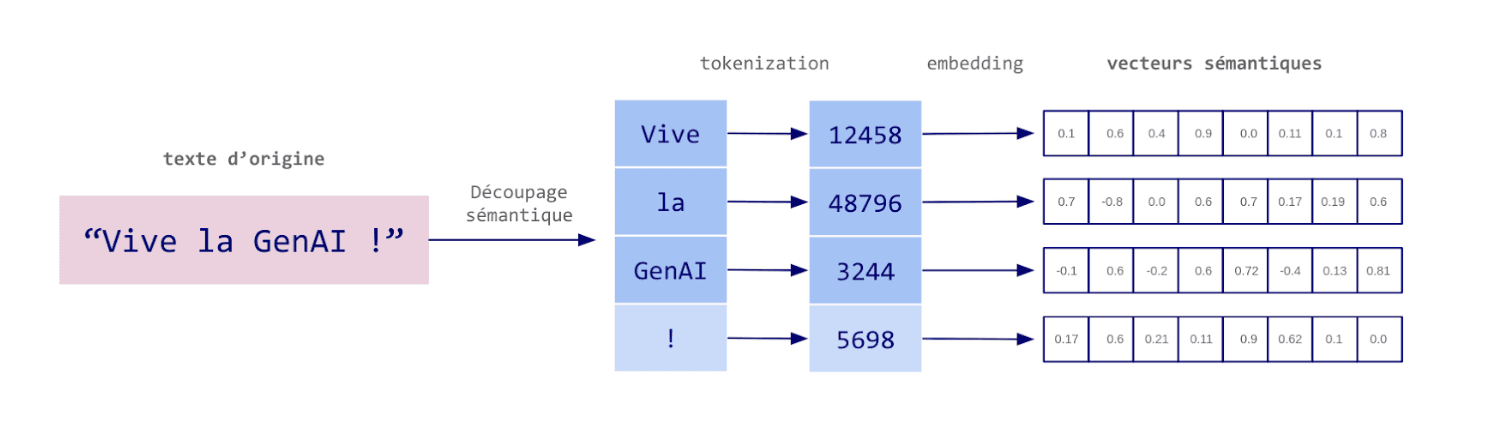

Once our text is converted into tokens, an algorithm (a neural network called an "Embedder") is used to transform them into vectors of numbers. Embedding (or semantic vectorization) creates a vector representation of a word. More precisely, it projects the word into a latent space. Let’s break down this concept. Take the sentence “Long live GenAI!” as an example:

After the transformation, we obtain a semantic vector for each token. This vector, a series of numbers, represents a position in a high-dimensional space.

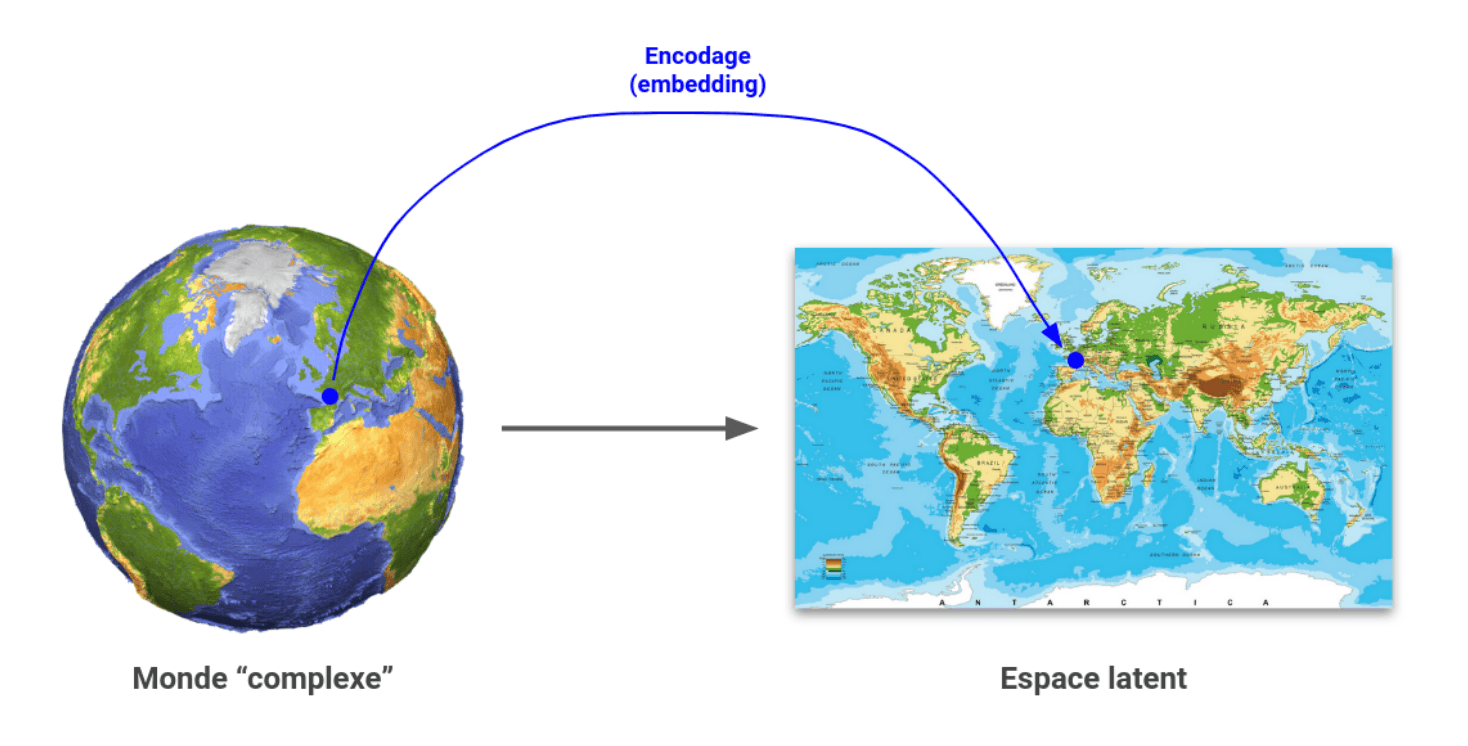
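To make this step concrete, here is a small sketch of an embedding lookup. It assumes a hypothetical four-token split of "Long live GenAI!" with made-up IDs, and it fills the table with random numbers; in a trained embedder these values are learned, and the vectors are far longer than four numbers.

```python
import random

EMBEDDING_DIM = 4  # illustrative only; real embedders use hundreds or thousands


def build_embedding_table(token_ids, dim=EMBEDDING_DIM, seed=0):
    """Assign one vector per token ID (random here, learned in a real model)."""
    rng = random.Random(seed)
    return {tid: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for tid in token_ids}


# Hypothetical token IDs standing in for "Long", "live", "GenAI", "!"
token_ids = [11, 12, 13, 14]
table = build_embedding_table(token_ids)

# One semantic vector per token: a position in a 4-dimensional space.
vectors = [table[tid] for tid in token_ids]
for tid, vec in zip(token_ids, vectors):
    print(tid, [round(x, 2) for x in vec])
```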

A latent space is a concept frequently used in artificial intelligence and mathematics. Imagine it as a space where points can be positioned. Its defining feature is that it’s built as a simplified representation of another, much more complex space.

To use an analogy, think of this space as a map. A map is a two-dimensional representation of a three-dimensional world: simplified yet rich enough to navigate effectively.

Similarly, the latent space we construct (for semantics) is a simplified representation of the complexity of language. This multidimensional map allows AI to assign meaning to words and create combinations. This method of representing knowledge enables the machine to grasp nuances and complex relationships that would otherwise be challenging to model (such as with a simple list of words or definitions).

Embedding and its dimensions

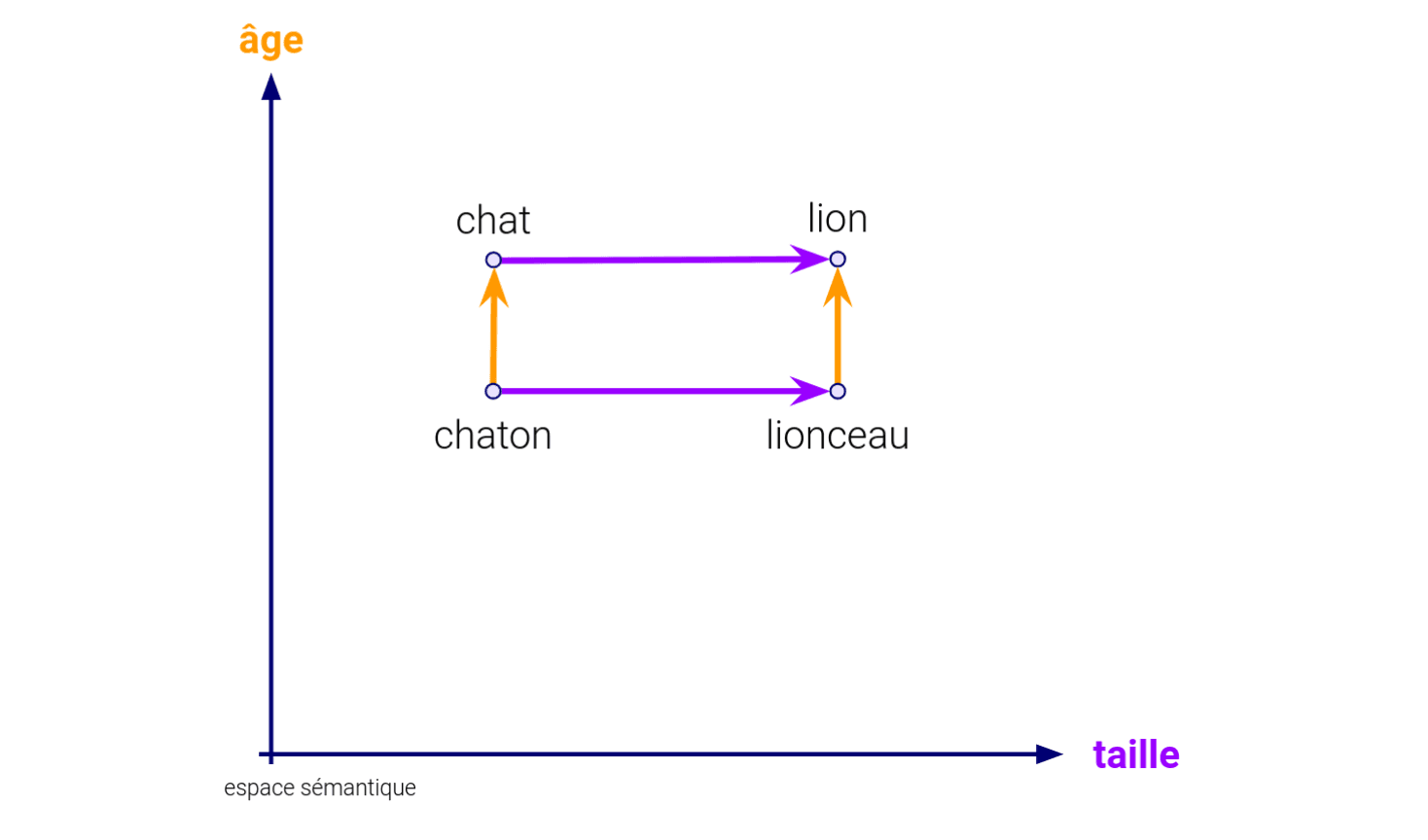

Each dimension (each number in the vector) can be thought of as representing a concept. These concepts are learned by the algorithm during training, as it progressively picks up the relationships between words and texts. In real models the dimensions are rarely this easy to name, but a simple two-dimensional space illustrates the idea. Arbitrarily, we’ll call the first dimension "age" and the second "size". Now, we generate embeddings for the words "cat," "kitten," "lion," and "cub" within these two dimensions and plot them on a graph.

You’ll notice the semantic relationships they share:

Age dimension: kitten is to cat what cub is to lion

Size dimension: cat is to lion what kitten is to cub

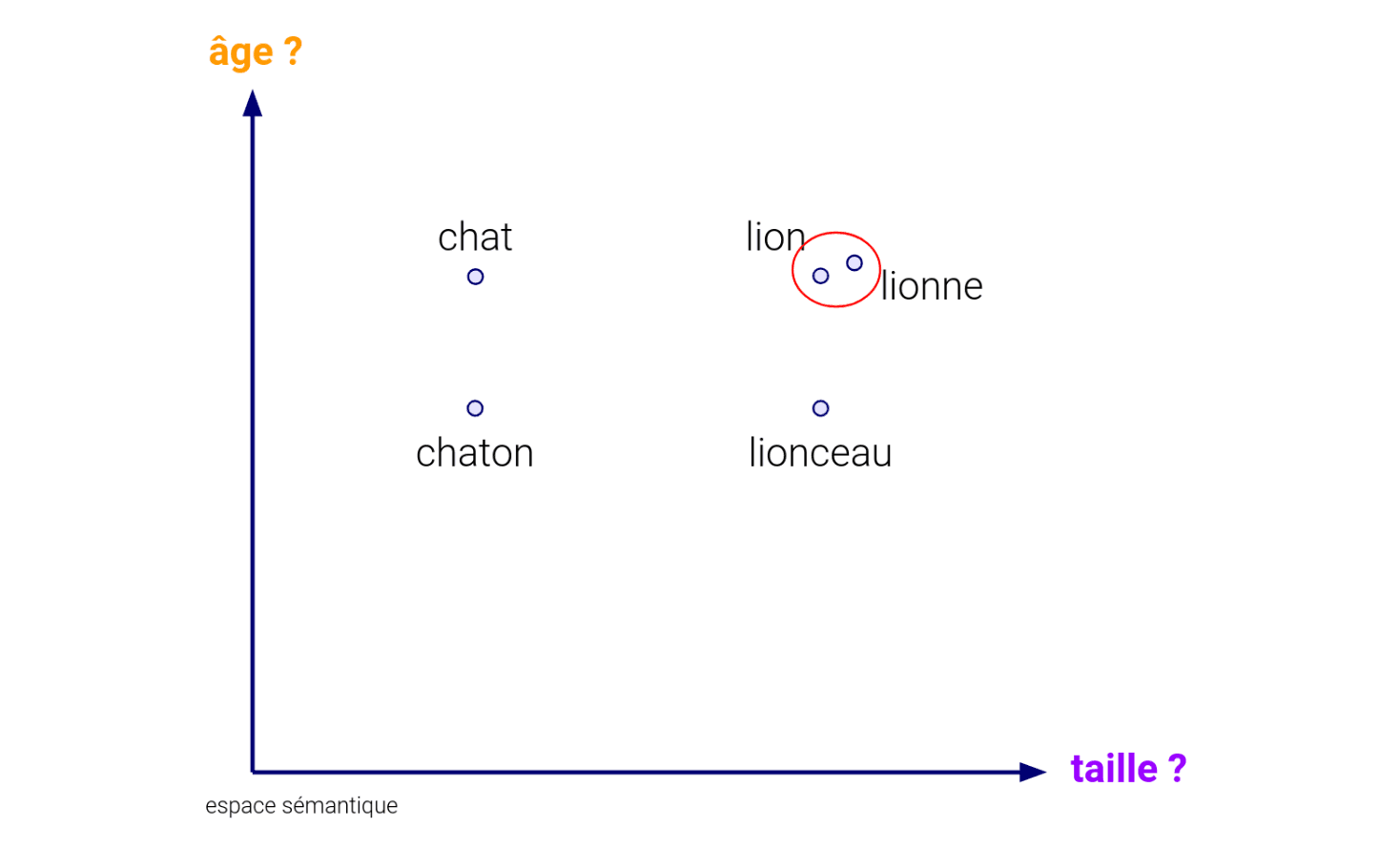

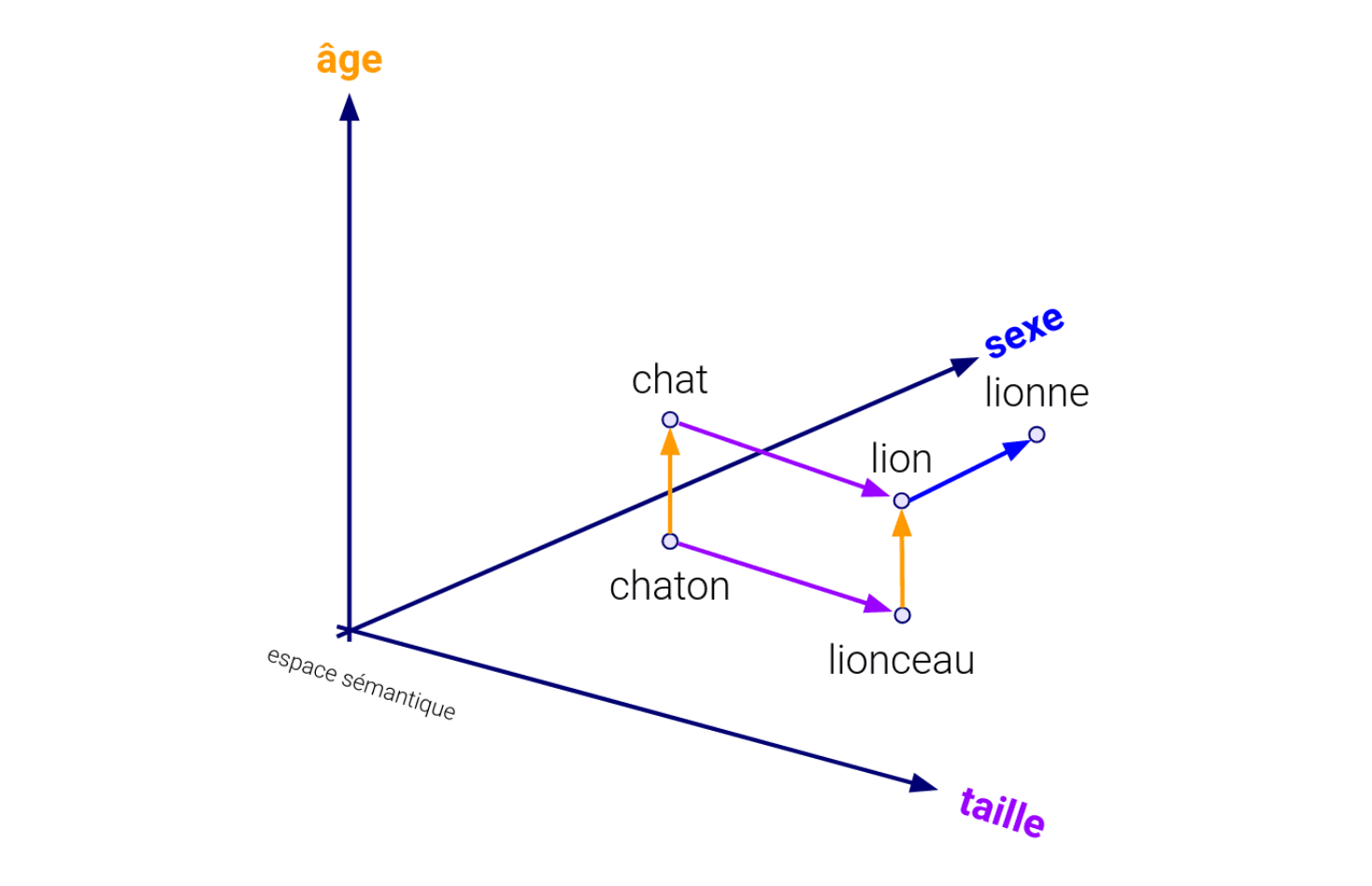

In other words, each dimension of our vector represents a concept that establishes relationships between vectors, and consequently, between texts. This leads to another critical notion: the proximity between vectors translates to a semantic closeness between texts. If we added "lioness" to the space, its embedding would be very close to "lion."
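The two relationships can be checked with simple vector arithmetic. The coordinates below are hand-picked for illustration (they are an assumption, not the output of any model): the first number stands for "age" and the second for "size".

```python
import math

# Hand-picked toy coordinates (age, size); real embeddings are learned, not chosen.
EMBEDDINGS = {
    "kitten": (0.1, 0.1),
    "cat": (0.9, 0.2),
    "cub": (0.1, 0.7),
    "lion": (0.9, 0.8),
}


def offset(start, end):
    """Vector that moves from one word's position to another's."""
    a, b = EMBEDDINGS[start], EMBEDDINGS[end]
    return tuple(y - x for x, y in zip(a, b))


def nearest_word(point):
    """Word whose embedding lies closest to the given point."""
    return min(EMBEDDINGS, key=lambda word: math.dist(EMBEDDINGS[word], point))


# "Growing up" is the move from kitten to cat; apply the same move to cub.
growing_up = offset("kitten", "cat")
predicted = tuple(c + d for c, d in zip(EMBEDDINGS["cub"], growing_up))
print(nearest_word(predicted))
```

With these coordinates, applying the kitten-to-cat move to "cub" lands on "lion", which is the "age" analogy expressed as arithmetic.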

In semantic space, two closely related embeddings have very similar values across their dimensions. This is where the mathematical tools associated with vectors come in. How do we know that "lion" is close to "lioness"? By measuring how far apart the two vectors are. Several measures exist (Euclidean distance, Manhattan distance, cosine similarity, etc.), each producing a single number. For a distance, a small value (e.g., 0.001) means the vectors are close and a larger one (e.g., 0.895) means they are farther apart. Cosine similarity runs the other way: values near 1 indicate closeness. If we added a gender dimension, it would introduce a new distinguishing factor and change the measured distance.
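The three measures mentioned above fit in a few lines each. The vectors are illustrative two-dimensional values chosen by hand, not real embeddings.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance: smaller means closer."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan_distance(a, b):
    """Sum of absolute differences per dimension: smaller means closer."""
    return sum(abs(x - y) for x, y in zip(a, b))


def cosine_similarity(a, b):
    """Cosine of the angle between the vectors: closer to 1 means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical (age, size) coordinates.
lion, lioness, cat = (0.9, 0.8), (0.9, 0.75), (0.9, 0.2)

for name, other in (("lioness", lioness), ("cat", cat)):
    print(
        name,
        round(euclidean_distance(lion, other), 3),
        round(manhattan_distance(lion, other), 3),
        round(cosine_similarity(lion, other), 3),
    )
```

Note the direction of each measure: the two distances shrink as vectors get closer, while cosine similarity grows.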

This process underlies Embedders and their ability to position a set of characters in a multidimensional space, called "latent space". These spaces don't just have two or three dimensions but often thousands! One of the most commonly used today, OpenAI’s text-embedding-3-large, operates in 3,072 dimensions. That many dimensions help capture the semantic complexity of natural language.

Embedders can create embeddings for any text (syllables, words, sentences, paragraphs, and so on), regardless of length, as long as it fits within the model's maximum capacity. OpenAI’s text-embedding-3-large can handle up to 8,191 tokens as input (a size determined by technical reasons), allowing it to abstract a vast amount of information. Naturally, the more tokens are condensed into a single vector, the more diffuse the resulting vector’s information. The length of the text to embed must therefore be chosen carefully, based on the problem at hand.
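One common way to keep inputs within that capacity, and to keep each vector focused, is to split a long token sequence into smaller chunks and embed each one separately. The helper below is a hypothetical sketch: its name and the fixed-size strategy are assumptions, and only the 8,191 default comes from the figure quoted above.

```python
def chunk_tokens(token_ids, max_tokens=8191):
    """Split a token sequence into consecutive chunks of at most max_tokens."""
    if max_tokens <= 0:
        raise ValueError("max_tokens must be positive")
    return [token_ids[i:i + max_tokens] for i in range(0, len(token_ids), max_tokens)]


# A pretend document of 20,000 tokens becomes three chunks to embed separately.
document = list(range(20_000))
chunks = chunk_tokens(document)
print([len(chunk) for chunk in chunks])
```

In practice the chunk size is often set well below the maximum, and chunk boundaries are aligned with sentences or paragraphs, so that each embedding stays specific.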

How does an algorithm create an embedding?

Text embedding is not a new challenge. One of the most famous algorithms, Word2Vec, emerged in 2013 from Google’s research teams. Though it evolved over time, it’s seldom used today. It relied on a shallow neural network that assigned each word a single vector, regardless of context, and was quickly outpaced by contextual models such as ELMo and BERT, the latter built on a 2017 innovation from Google: the Transformer.

Structure of Transformers

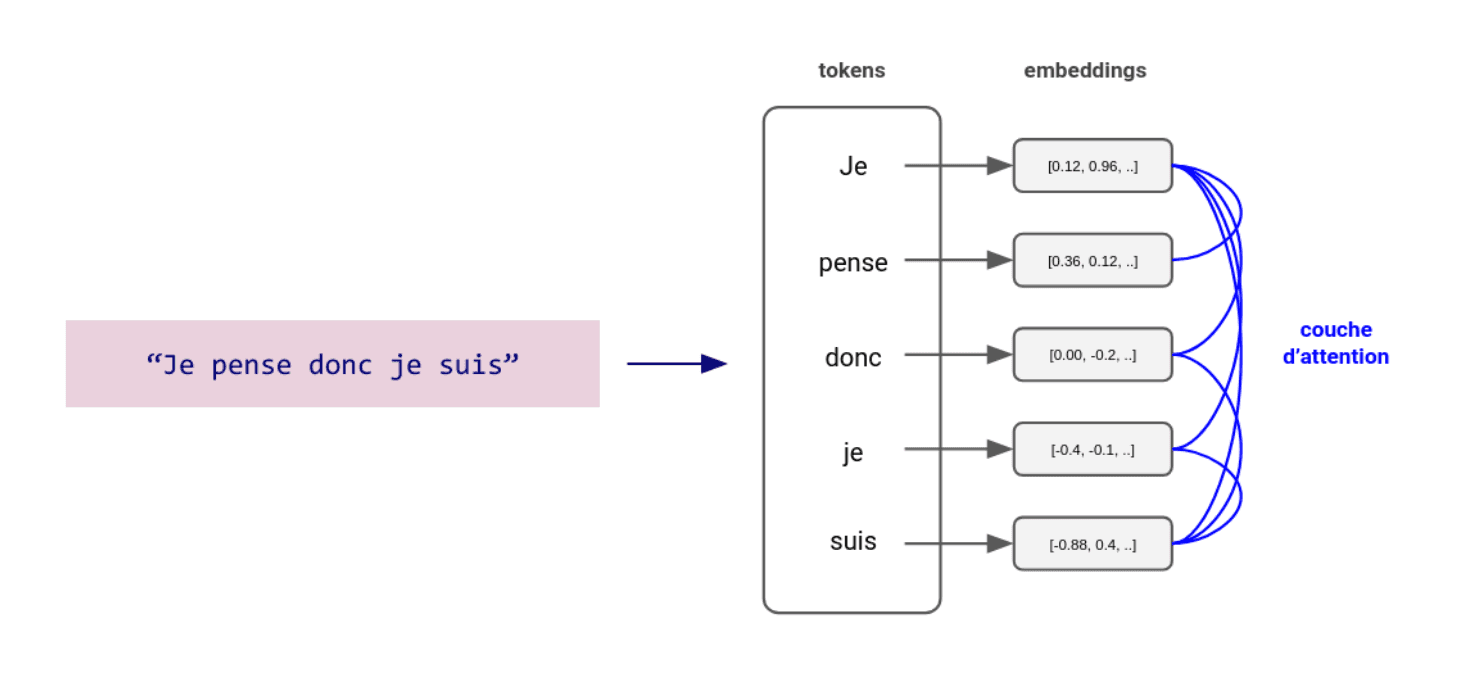

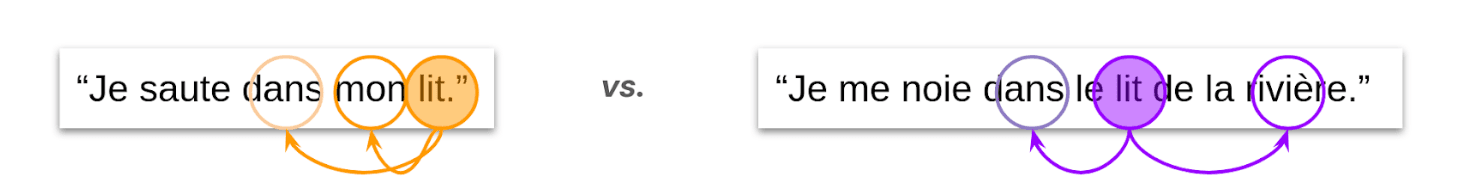

“Attention Is All You Need” is the title of a 2017 research paper from Google that introduced the Transformer architecture and, with it, a new approach to embedding. It proposed treating each word not independently but as part of a whole, such as a sentence. The reasoning is simple: context is crucial for understanding a sentence’s meaning. Each word (token) must be related to all others in the sentence to fully grasp its meaning.

The process works as follows:

The text is broken into tokens

The tokens are transformed into semantic vectors (embeddings)

The tokens are enriched with the context of surrounding tokens (attention layers)

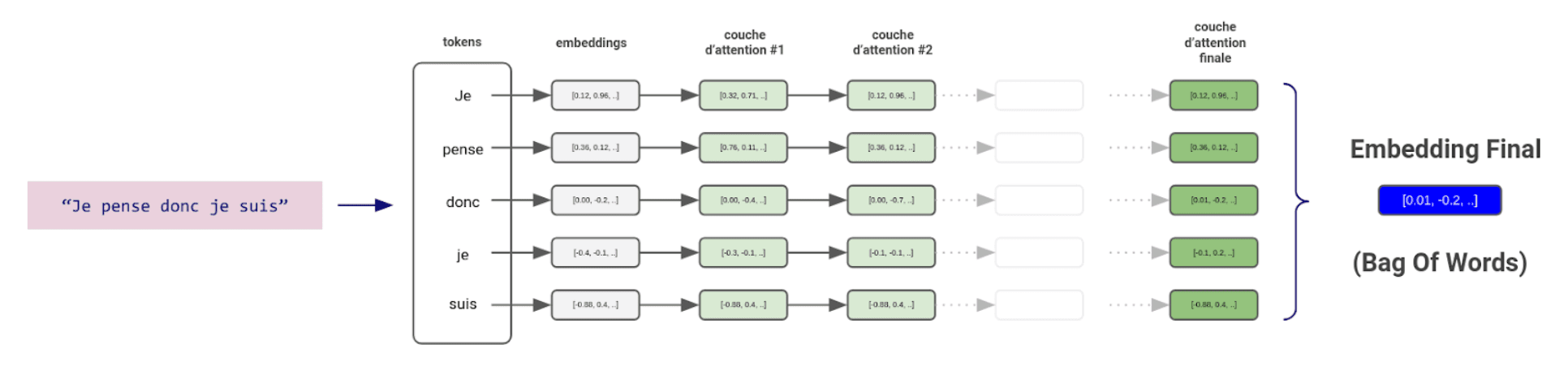

Transformers are themselves neural networks: they build abstractions through a stack of layers. Each attention layer modifies the semantic vectors. The goal is to move each embedding in latent space so that it carries both the information of its token and that of its surrounding context.

As illustrated, if we want to position the word "lit" in latent space, it gradually gains meaning based on the other words in the sentence. This movement of embeddings through layers happens by calculating weights based on token relationships, allowing the model to emphasize or reduce the influence of other tokens in the sentence.
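The weighting described above can be sketched as a simplified self-attention pass. This is an assumption-laden illustration: a real Transformer layer first projects each vector with learned query, key, and value matrices and uses several attention heads, whereas this version compares the raw vectors directly.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Replace each vector by a weighted mix of all vectors in the sentence."""
    dim = len(vectors[0])
    contextual = []
    for query in vectors:
        # How strongly this token relates to every token (scaled dot product).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Weighted sum: high-weight tokens pull this embedding toward them.
        contextual.append(
            [sum(w * v[d] for w, v in zip(weights, vectors)) for d in range(dim)]
        )
    return contextual


# Three illustrative token vectors; each output row blends in its context.
tokens = [[1.0, 0.0], [0.8, 0.2], [0.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```

The weights are what let the model emphasize or reduce the influence of each neighboring token; stacking such passes moves every embedding step by step through latent space.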

The layers are chained (in large quantities) until reaching a final attention layer. From its output, a single semantic vector representing the whole text is derived. An early and classic way of constructing it is to take the average of the token vectors (often called mean pooling), an approach reminiscent of the Bag of Words technique.

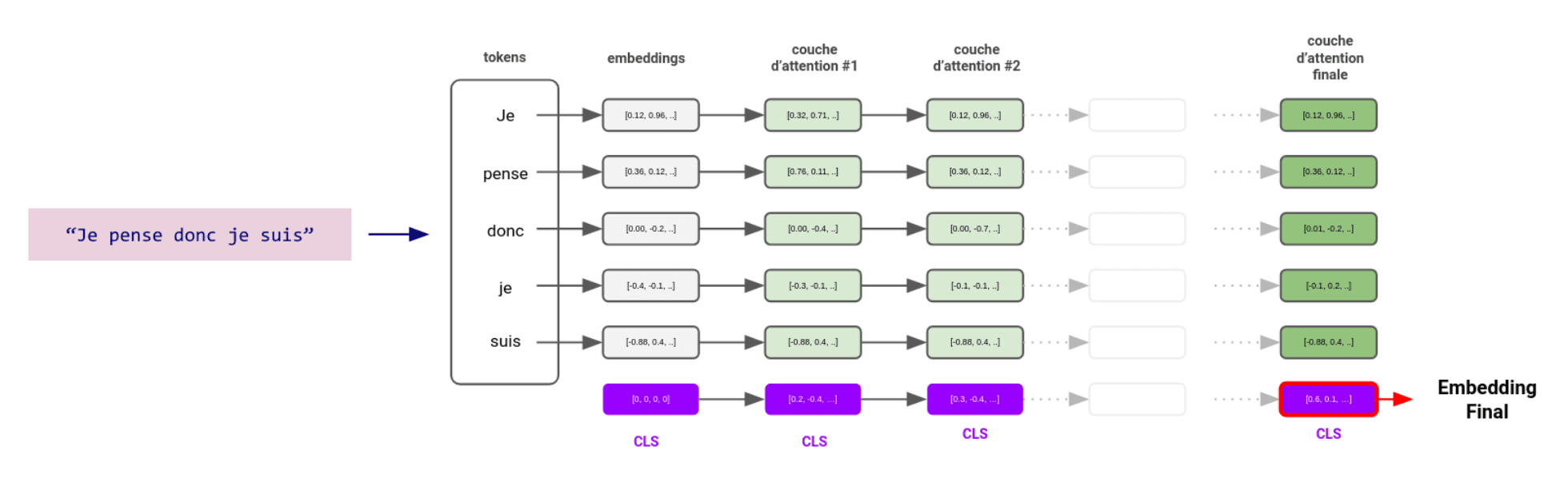
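Averaging the token vectors takes only a few lines. The input values below are made up for illustration and stand in for the output of the final layer.

```python
def mean_pool(vectors):
    """Average the token vectors dimension by dimension into one text vector."""
    count = len(vectors)
    return [sum(column) / count for column in zip(*vectors)]


# Three hypothetical final-layer token vectors for a short text.
final_layer = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
print(mean_pool(final_layer))
```

Every token contributes equally to the result, which is what makes this approach simple but somewhat blunt.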

An improvement: the CLS Token

However, this method remains somewhat rudimentary, whereas the Transformer architecture seeks to leverage the power of neural networks to build knowledge. A more refined method has since been developed and is widely used, notably in BERT: the CLS Token. “CLS” stands for "classification," as this token helps the model classify the meaning of the text. The idea builds on the very process of neural network training. The CLS Token is treated as a neutral word, added to the text (in BERT, at the very beginning), whose starting vector carries no meaning from the text itself. As part of the token set, it interconnects with all other embeddings and gains information from each attention layer. The goal is for it to progressively accumulate meaning as it passes through the layers until it reaches the final layer. At this point, it has absorbed the information conveyed in the text. Its value is the final embedding output by the embedder.
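The mechanism can be sketched as follows. This sketch follows the BERT convention of placing the special token at the first position, and it reuses a simplified attention pass with no learned weights, so it only illustrates how the token gathers information; the function names and values are assumptions made for the example.

```python
import math


def attention_layer(vectors):
    """One simplified self-attention pass (no learned weights)."""
    dim = len(vectors[0])
    mixed = []
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        peak = max(scores)
        exps = [math.exp(s - peak) for s in scores]
        total = sum(exps)
        mixed.append(
            [sum(e / total * v[d] for e, v in zip(exps, vectors)) for d in range(dim)]
        )
    return mixed


def cls_embedding(token_vectors, cls_vector, num_layers=2):
    """Prepend the CLS vector, run the layers, and read the CLS position back."""
    sequence = [cls_vector] + token_vectors
    for _ in range(num_layers):
        sequence = attention_layer(sequence)
    return sequence[0]  # the CLS slot now summarizes the whole text


# A neutral (all-zero) CLS vector picks up information from both tokens.
text_vector = cls_embedding([[1.0, 0.0], [0.0, 1.0]], cls_vector=[0.0, 0.0])
print([round(x, 3) for x in text_vector])
```

In a trained model, the layers' learned weights decide what the token retains from each word, rather than the plain mixing used here.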

In Large Language Models (LLMs)

An LLM is a massive neural network. Like other neural networks, it consists of input layers that convert and enrich information, decision layers that interpret, and an output layer that renders the network’s decision. The embedder and the semantics we’ve discussed correspond to these input layers, transforming the information into a language the LLM understands to make decisions about the text it generates in response.

Conclusion

Embedding today is no longer limited to simple text. Training methods for these models have evolved to include images, videos, and audio. We can now integrate diverse data types into the same semantic space! This means that images and videos can be retrieved based on the concepts they convey. It also means that LLMs, now multimodal, can understand a wide variety of content. This evolution significantly expands the initial technology’s capabilities, allowing it to be applied to increasingly diverse and complex fields.

In our next article, we’ll explore how Generative AI and embeddings can be used in concrete applications (some of which are already implemented!).