Hosting - Part 3: What the cloud does for us

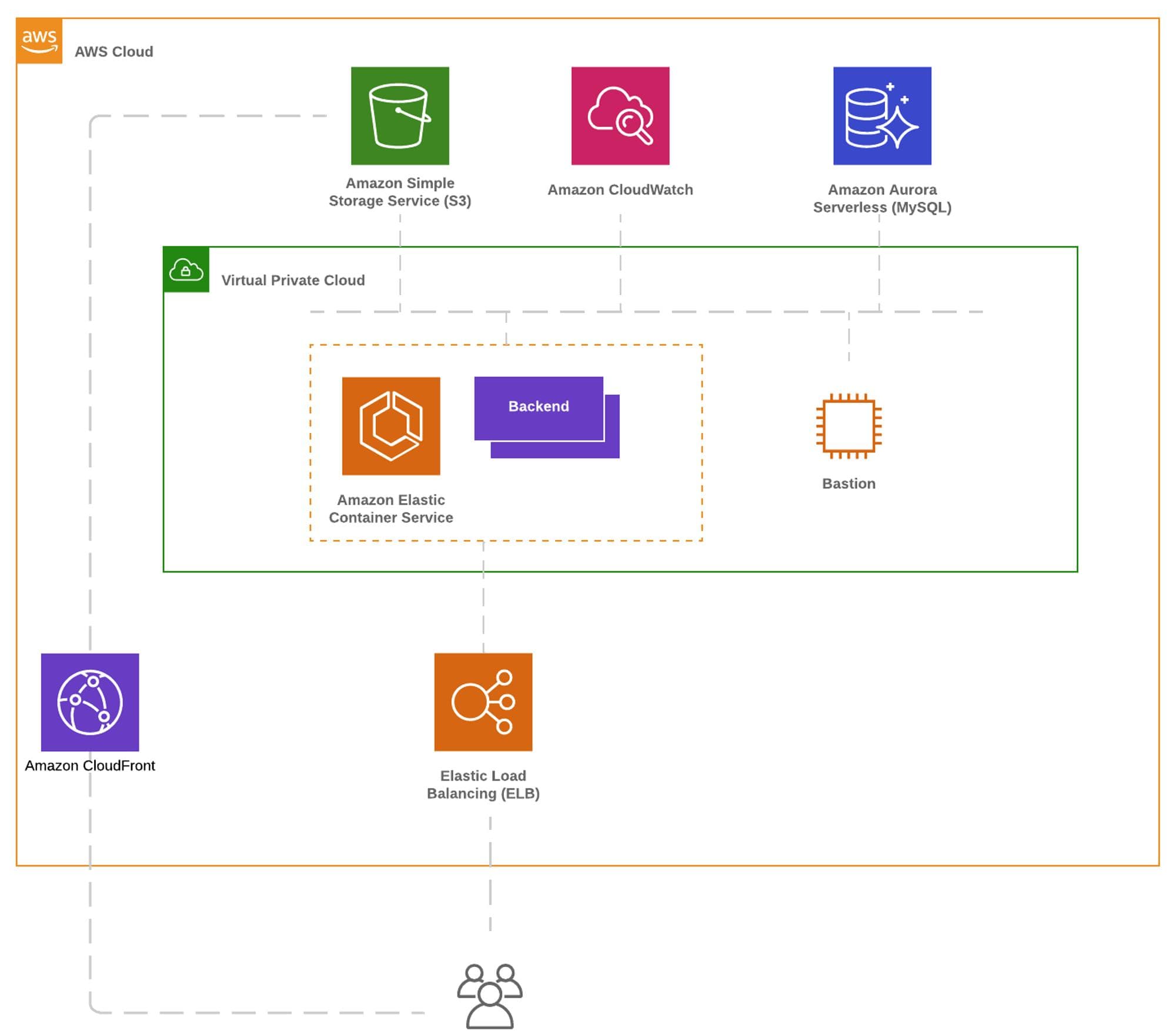

The main services used in our backends

At BeTomorrow, we usually work at the PaaS level. All our backends are different, but they often share a common base built on a handful of AWS services. Of course, we don't use them all: AWS offers more than 200 services. Let's look at this base in more detail, and especially at what AWS brings us compared to simple IaaS.

ELB (Elastic Load Balancer)

When the mobile application makes a network request, it first arrives at the load balancer. This component receives the requests and distributes them across our backends. Thanks to it, we don't have to increase the power of a single machine: we can simply add or remove backend instances depending on the number of requests. It also handles the encryption of requests, so we no longer need to configure and renew HTTPS certificates ourselves. This component is highly available and fully managed by AWS.
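
To make the idea of spreading requests over a variable number of instances concrete, here is a minimal sketch of a round-robin balancer. It is only an illustration, not how ELB is implemented: the `RoundRobinBalancer` class, the backend names and the request paths are all hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy load balancer: hands each request to the next backend in turn."""

    def __init__(self, backends):
        self.backends = list(backends)
        self._rotation = cycle(self.backends)

    def add_backend(self, backend):
        # Scaling out: register one more instance and restart the rotation.
        self.backends.append(backend)
        self._rotation = cycle(self.backends)

    def route(self, request):
        # Return the backend chosen for this request, along with the request.
        return next(self._rotation), request


# Hypothetical backend names and request paths, for illustration only.
balancer = RoundRobinBalancer(["backend-1", "backend-2"])
targets = [balancer.route(f"GET /photos/{i}")[0] for i in range(4)]
print(targets)
```

Handling more traffic then comes down to calling `add_backend` rather than resizing a machine.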

ECS (Elastic Container Service)

We've already talked about it a bit: it's the CaaS system. It allows us to deploy our backends directly as Docker images. We just have to tell it where the image we want to deploy is located, and it does the rest. Coupled with the load balancer, it also offers "Rolling Update": when we deploy a new version, the system starts the new backends alongside the old ones, waits for them to be operational, then redirects traffic to the new instances before removing the old ones. We can therefore deploy our backends without any interruption of service and without any manual intervention.
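
The sequence described above can be sketched as a small simulation. This is a simplified model under stated assumptions, not the ECS API: the function `deploy_without_downtime`, the instance names and the `is_healthy` callback are hypothetical.

```python
def deploy_without_downtime(old_instances, new_instances, is_healthy):
    """Return (instances receiving traffic, log of the steps taken)."""
    # Step 1: the new instances start next to the old ones.
    log = [f"start {name}" for name in new_instances]

    # Step 2: wait until every new instance reports as operational.
    if not all(is_healthy(name) for name in new_instances):
        log.append("new version unhealthy: traffic stays on old instances")
        return list(old_instances), log

    # Step 3: switch traffic, and only then remove the old instances.
    log.append("redirect traffic to new instances")
    log.extend(f"stop {name}" for name in old_instances)
    return list(new_instances), log


# Hypothetical instance names; every new instance is assumed healthy here.
serving, steps = deploy_without_downtime(
    ["v1-a", "v1-b"], ["v2-a", "v2-b"], is_healthy=lambda name: True
)
print(serving)
print(steps)
```

At every step at least one full set of instances is receiving traffic, which is why users see no interruption.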

S3 (Simple Storage Service)

S3 is the object storage service. When we upload a photo, an avatar for example, it is stored here. Apart from human error, it is almost impossible to lose an object stored on S3: AWS designs the service for 99.999999999% durability. To understand how AWS achieves this feat, we must look at how it has set up its infrastructure.

AWS has set up a certain hierarchy:

Region: At the first level are the Regions. These are large geographical areas spread across many countries. For example, France has the Paris region, England has the London region, and Ireland has its own region as well.

Availability Zone: In each region we find Availability Zones (AZs). There are usually 3 or more per Region. They can be seen as subdivisions, placed several kilometers apart so that a local incident does not affect two of them, while staying within about 100 km of each other.

DataCenter: Finally, in each AZ we find several DataCenters spaced several tens of meters apart. They are like houses in the subdivisions, in which we will find the servers.

It's a far cry from the OVH data centers, which look a lot like a set of recycled shipping containers stacked on top of each other.

When a file is placed on S3, it goes to a DataCenter located in an AZ of the region we have chosen. With the default storage class, AWS then stores it redundantly on several devices spread across at least three AZs of that region. Losing a machine, a DataCenter or even an entire AZ is therefore not enough to lose the file.

Aurora MySQL

Aurora MySQL is a database created by AWS and compatible with the still very popular MySQL. With this service, we get a MySQL-compatible database that performs even better under many concurrent requests and that can be deployed with a single click.

In the minimum offer from AWS, this database is deployed in a single data center in one of the availability zones of the chosen region. However, each time the data changes, the change is backed up to S3, which replicates it to other AZs. If the first database fails, we can therefore quickly restart a new, up-to-date database in another AZ at no additional cost. Of course, we can go further by running several database instances in parallel and letting Aurora fail over to a replica if the primary one goes down, without even interrupting the service.

CloudWatch

To better understand what is happening in the code when we encounter a bug, we need to log various events: for example, that execution went through a given function, or that a service responded that it could not find a file. Above all, we log all the information we can when an error occurs. When our backends run on multiple servers, we then need to retrieve these logs from every server and merge them to read them in chronological order. CloudWatch centralizes all these logs for us and offers a unified view in which we can go back in time to better understand what happened. It's also where we find many metrics on the overall health of our platform.
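
As an illustration of what "merging logs to read them in chronological order" involves, here is a minimal sketch using only the Python standard library. It does not use the CloudWatch API; the server names, timestamps (seconds since an arbitrary start) and messages are hypothetical.

```python
import heapq

# Each server produces its own log, already sorted by timestamp.
# Entries are (timestamp_in_seconds, server, message); all values are made up.
server_a = [(1, "server-a", "request received"), (4, "server-a", "file not found")]
server_b = [(2, "server-b", "request received"), (3, "server-b", "response sent")]


def merge_logs(*streams):
    """Merge per-server logs into a single timeline ordered by timestamp."""
    return list(heapq.merge(*streams, key=lambda entry: entry[0]))


timeline = merge_logs(server_a, server_b)
for timestamp, server, message in timeline:
    print(f"t={timestamp}s {server}: {message}")
```

A centralized log service spares us from collecting the files and doing this merge ourselves on every investigation.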

CloudFront (Content Delivery Network)

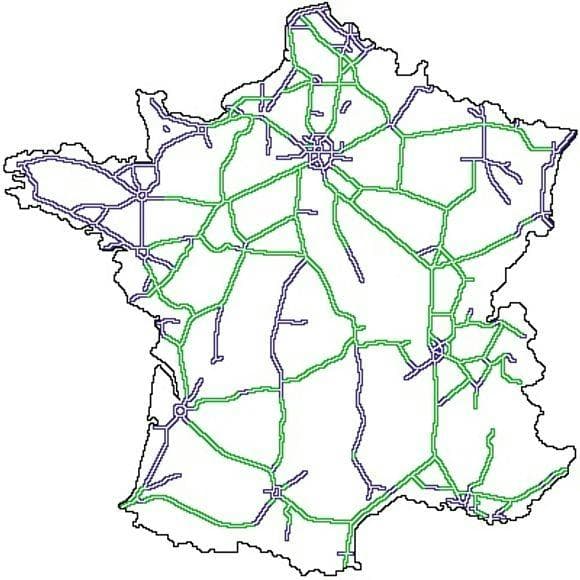

When we are on the internet, our requests travel through what is called the "public internet." The problem is that this network may be congested, or have roadworks that force detours and increase response time. It's a bit like the national roads in France.

Alongside this network, providers deploy their own private networks, which they control and size precisely. We can see these networks as highways. To go faster, it is better to find the nearest toll booth to get onto them. CloudFront is a bit like the toll booth of the AWS highways: it lets us enter the AWS network through the nearest entry point based on our geographic location.

In addition, CloudFront can keep a copy of the response locally at this entry point. If another user comes through the same entry point, the request won't even reach our servers: the cached response is returned directly.
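
The caching behavior described above can be sketched as follows. This is a deliberately simplified model, not CloudFront's implementation: the `EdgeCache` class and the `fetch_from_origin` function are hypothetical, and the sketch ignores expiry, which a real CDN handles with configurable lifetimes.

```python
class EdgeCache:
    """Toy edge location: serves a local copy when it already has one."""

    def __init__(self, fetch_from_origin):
        self._fetch = fetch_from_origin
        self._store = {}
        self.origin_calls = 0  # how many requests actually reached our servers

    def get(self, path):
        if path not in self._store:
            # Cache miss: go all the way to the origin and keep a copy.
            self.origin_calls += 1
            self._store[path] = self._fetch(path)
        # Cache hit (or freshly stored copy): answer from the edge.
        return self._store[path]


def fetch_from_origin(path):
    # Stand-in for a request to our backend (hypothetical).
    return f"content of {path}"


edge = EdgeCache(fetch_from_origin)
first = edge.get("/logo.png")   # first user: the request reaches the origin
second = edge.get("/logo.png")  # next user: served from the edge copy
print(first == second, edge.origin_calls)
```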

This allows us to have a website that loads very quickly from anywhere in the world.

Most of the services listed above also exist at OVH, for example, in more or less mature versions. But projects often lead us to use other services depending on their needs, such as CloudSearch for indexing, SQS for queue management, Lambda for processing in response to events, and many others that have no counterpart at OVH for the moment.

And where does Serverless fit into all this?

Literally, Serverless means that we don't have to worry about the machine that runs a service. In that sense, the services listed above are all Serverless, or also exist in a Serverless version. At most, we give an indication of the capacity our backend needs, and nothing more. Behind the scenes, the provider shares its machines among its customers and deploys our backend wherever there is still enough room.

Today, people generally confuse FaaS and Serverless. In theory, FaaS is magical: we pay only when there are requests, which saves on infrastructure costs, and the supported load adapts automatically to the number of requests. In practice, it's not that simple, and it brings a whole set of complications that we will cover in another article.

Conclusion

We see here that the cloud lets us go much further than simple hosting, saving time both during implementation and during operation, while giving us a more reliable and more secure platform. But what is the impact on the cost of hosting? That is what I suggest we look at in a dedicated article to come.